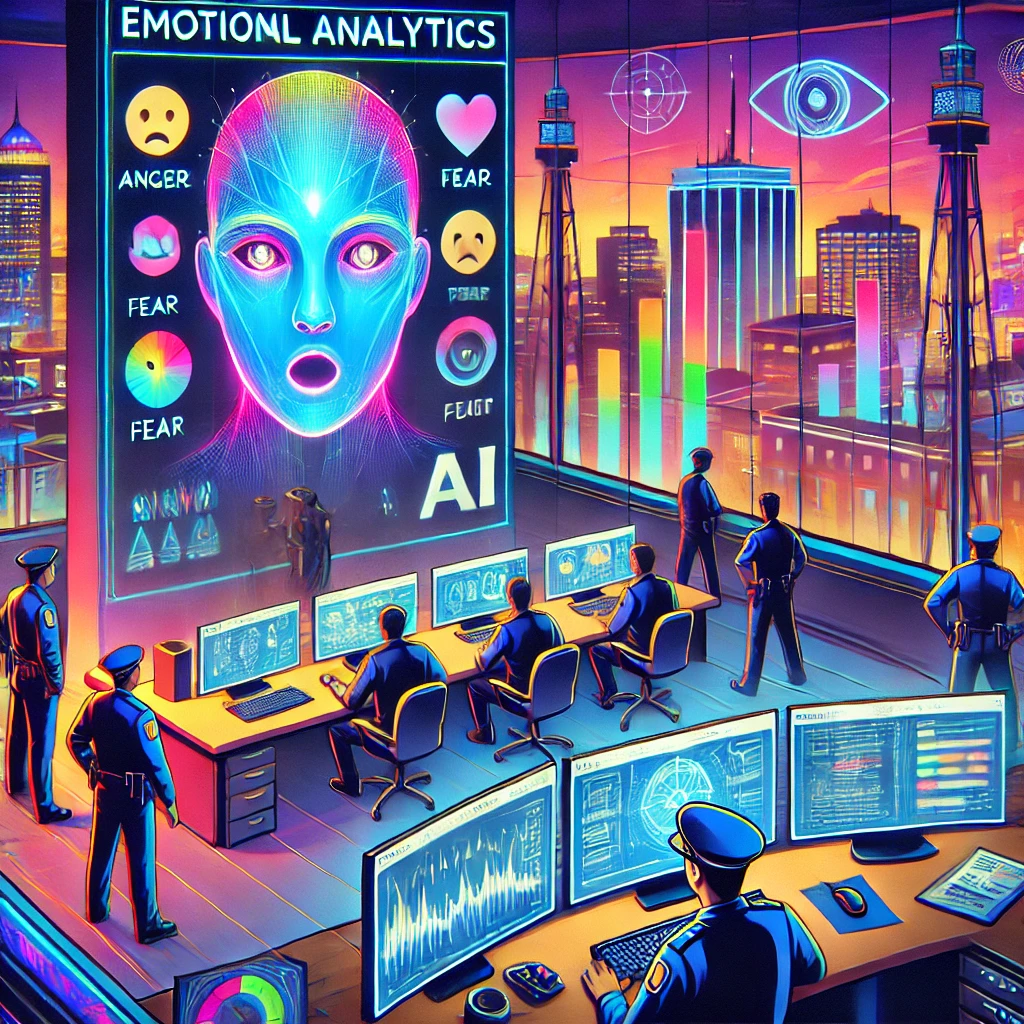

Emotional AI in policing: A promise or a threat to civil liberties?

Among the many advancements in AI, Emotional AI stands out for its promise to analyze and predict human emotions, offering potential breakthroughs in crime prevention and urban surveillance. However, this seemingly revolutionary technology also raises profound ethical, practical, and societal concerns. How far should we go in granting machines the power to interpret and act on human emotions?

The study "We Have to Talk About Emotional AI and Crime" by Lena Podoletz, published in AI & Society (vol. 38, pp. 1067-1082, 2023), delves into this critical question. By examining the deployment of Emotional AI in policing, the research highlights its potential applications, inherent limitations, and the far-reaching implications for societies that value individual rights and freedoms. As we stand at the intersection of innovation and ethics, this study invites us to rethink the role of AI in enforcing laws and safeguarding civil liberties.

Emotional AI: Beyond detection to prediction

Emotional AI leverages affective computing to identify and predict human emotional states using data such as micro-expressions, voice modulation, and physical behavior. The premise is that by understanding emotions and behaviors, AI can assist law enforcement in preempting criminal actions. However, emotions are inherently complex, subjective, and influenced by cultural, environmental, and situational contexts, making accurate interpretation highly challenging.

Podoletz notes that Emotional AI systems are not merely tools for analysis; they also shape law enforcement practices and policies. For instance, Emotional AI might be built into surveillance infrastructure such as facial recognition cameras and crowd monitoring systems, or even into interrogation procedures. Despite these potential applications, the reliability, ethical implications, and unintended consequences of such uses demand deeper scrutiny.

Applications of Emotional AI in policing

Predictive Policing and Crime Prevention

Emotional AI has the potential to strengthen predictive policing strategies by analyzing patterns of behavior assumed to signal criminal intent. For example, individuals exhibiting heightened aggression or fear in certain environments could be flagged for intervention. While this approach could theoretically deter crimes before they occur, its underlying assumption, that emotions directly correlate with criminal behavior, is problematic. Misinterpretations could lead to false positives, stigmatizing individuals based on ambiguous data.
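Why false positives matter so much here becomes clearer with a rough back-of-the-envelope calculation. The sketch below uses purely hypothetical numbers that do not come from Podoletz's study: a crowd of 100,000 people, of whom 100 actually intend harm, screened by a system assumed to flag 90% of those 100 and, wrongly, 5% of everyone else. The function name `flag_outcomes` is illustrative only.

```python
def flag_outcomes(population: int, true_cases: int,
                  sensitivity: float, false_positive_rate: float) -> dict:
    """Expected outcomes when a screening system flags people in a crowd.

    Every input is a hypothetical illustration value, not a figure from the study.
    """
    innocent = population - true_cases
    true_positives = true_cases * sensitivity         # people with real intent who get flagged
    false_positives = innocent * false_positive_rate  # innocent people who get flagged anyway
    flagged = true_positives + false_positives
    return {
        "flagged": flagged,
        "true_positives": true_positives,
        "false_positives": false_positives,
        "precision": true_positives / flagged,        # share of flags that are correct
    }


# Hypothetical scenario: 100,000 people, 100 of whom actually intend harm.
result = flag_outcomes(population=100_000, true_cases=100,
                       sensitivity=0.9, false_positive_rate=0.05)
print(f"{result['false_positives']:.0f} innocent people flagged; "
      f"{result['precision']:.1%} of flags are correct")
# prints: 4995 innocent people flagged; 1.8% of flags are correct
```

Under these assumed numbers, roughly 98 of every 100 people flagged would be innocent. When the behavior being predicted is rare, even a seemingly accurate system produces mostly false alarms.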

Surveillance in Urban Spaces

Cities increasingly deploy surveillance technologies, and Emotional AI could amplify these capabilities. By integrating AI with systems like CCTV cameras or drones, authorities might monitor public spaces for behaviors deemed suspicious or hostile. For example, Emotional AI could be used during protests to identify potential agitators. However, such widespread surveillance risks creating an atmosphere of constant monitoring, undermining the rights to privacy and free assembly. The chilling effect of such surveillance could stifle democratic freedoms and discourage civic participation.

Lie Detection in Interrogation

One of the more direct applications of Emotional AI is in lie detection during interrogations. By analyzing micro-expressions, vocal changes, and physiological signals, AI systems could evaluate a suspect’s truthfulness. While this application might appear promising, it inherits the limitations of traditional polygraph tests, which are often criticized for their inaccuracy. Emotional AI, similarly, struggles to interpret human behavior universally, as cultural and individual differences heavily influence emotional expressions.

Challenges and ethical dilemmas

Podoletz highlights several critical challenges that Emotional AI poses in policing:

Reliability and Accuracy

Despite advancements, Emotional AI systems remain far from reliable. Studies have reported error rates as high as 40% for some emotion recognition algorithms, with accuracy heavily dependent on the cultural and demographic makeup of the data used for training. For example, an expression interpreted as anger in one culture might signify concentration in another. Such inaccuracies create the potential for misjudgments with life-altering consequences.

Algorithmic Bias and Inequality

Emotional AI systems are prone to biases embedded in their training datasets. If datasets reflect societal inequities (such as racial, gender, or socioeconomic biases), these biases will inevitably influence the AI's decisions. This creates a risk of disproportionately targeting marginalized groups, exacerbating existing inequalities within the justice system.

Privacy and Surveillance Overreach

The deployment of Emotional AI in public spaces raises significant privacy concerns. The ability to infer emotions and intentions from surveillance data represents an unprecedented level of intrusion. Such monitoring could lead to profiling, discrimination, and the erosion of personal freedoms, particularly in settings where individuals expect a degree of anonymity.

Accountability and Transparency

A major challenge with Emotional AI is determining accountability. When AI systems produce erroneous or biased results, who is responsible—the developers, the law enforcement agencies using the technology, or the policymakers who approved its deployment? Furthermore, the “black box” nature of AI systems makes it difficult for stakeholders to understand and challenge decisions, undermining public trust.

Societal Implications in Liberal Democracies

In liberal democracies, values such as privacy, freedom of expression, and the right to protest are foundational. Emotional AI, when misused, has the potential to undermine these principles. The study warns against the normalization of surveillance technologies that prioritize control over individual rights, particularly in societies that champion civil liberties.

Recommendations for responsible use

Podoletz argues that any deployment of Emotional AI in policing must be critically assessed to balance its potential benefits against ethical and societal risks. Key recommendations include:

- Rigorous Validation and Auditing: Emotional AI systems should undergo thorough testing to ensure accuracy across diverse populations. Independent audits must assess the fairness and reliability of algorithms before deployment.
- Transparent Policies: Clear guidelines should define how Emotional AI is used, who has access to the data, and how decisions are made. Transparency fosters public trust and enables informed oversight.
- Human Oversight: Emotional AI should serve as a supplementary tool, not a replacement for human judgment. Decisions based on AI analyses must be verified by trained professionals to mitigate errors.
- Public Engagement: Policymakers should engage with the public to address concerns and ensure that Emotional AI aligns with societal values. Public dialogue can help establish ethical boundaries for its use.
- Restricting Use in Sensitive Areas: The deployment of Emotional AI in politically sensitive contexts, such as protests or public assemblies, should be strictly regulated to prevent abuses of power.
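The recommendation on validation and auditing, checking accuracy across diverse populations, can be made concrete with a disaggregated error check. The sketch below assumes an auditor has a labeled test set in which each record carries a demographic group, the system's predicted emotion, and a human-verified label. The record format, group names, and sample records are hypothetical and are not data from the study.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the share of misclassified records for each demographic group.

    Each record is a (group, predicted_label, true_label) tuple; this is a
    hypothetical audit format, not an established standard.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical audit sample: the same system evaluated on two groups.
sample = [
    ("group_a", "anger", "anger"),
    ("group_a", "neutral", "neutral"),
    ("group_a", "fear", "fear"),
    ("group_a", "anger", "neutral"),
    ("group_b", "anger", "neutral"),
    ("group_b", "anger", "concentration"),
    ("group_b", "neutral", "neutral"),
    ("group_b", "fear", "neutral"),
]

rates = error_rates_by_group(sample)
gap = max(rates.values()) - min(rates.values())
print(rates)  # {'group_a': 0.25, 'group_b': 0.75}
print(f"largest gap between groups: {gap:.0%}")  # largest gap between groups: 50%
```

A single overall accuracy figure would hide this disparity. Deciding how large a gap is acceptable before deployment remains a policy judgment rather than something the code can settle.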

First published in: Devdiscourse